Multi-Turn Chatbot

Overview of implementing multi-turn chatbots using Freeplay.

Introduction

Many LLM applications involve more than one-off, isolated LLM completions. Chatbots in particular consist of multiple back-and-forth exchanges between a user and an assistant, which makes them distinct to test and evaluate.

This document walks through how to use Freeplay to build, test, review logs, and capture feedback on multi-turn chatbots, including how to make use of a special history object:

- Defining `history` in prompt templates
- Managing `history` with the Freeplay SDK
- Recording and viewing chat turns in Freeplay as traces
- Managing datasets, configuring evals and automating tests that include `history`

In this document, we will refer to one back-and-forth exchange between the user and the assistant as a "turn".

Understanding History for Chatbots

First, why does history matter when building a multi-turn chatbot?

Importantly, each exchange must be aware of all the previous exchanges in the conversation — aka the "history" — such that the LLM can give an answer that is contextually aware. Experimentation and testing with multi-turn chat must also take history into account, since any simulated test cases need to include relevant context.

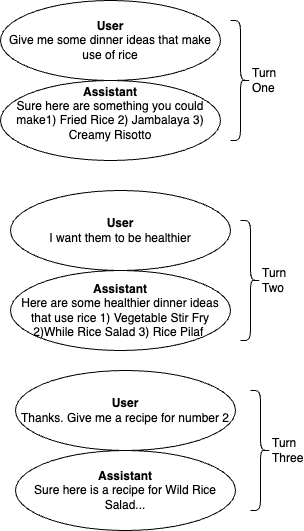

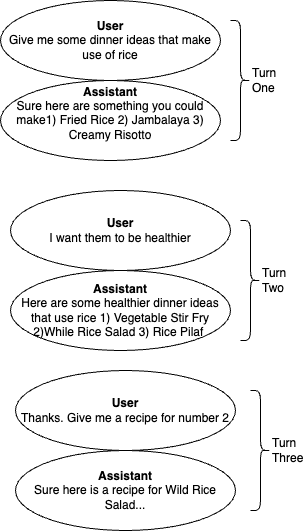

Consider this series of exchanges between the user and assistant:

In Turn Two, the assistant needs to have the context from the previous turn to give a reasonable answer. "I want them to be healthier" is the user's request for healthy dinner ideas that make use of rice.

By Turn Three, the assistant needs to reference Turn Two to know what "Give me a recipe for number 2" refers to. And so forth.

Without an understanding of the context from the conversation history, each new message would be impossible to interpret.

Note: While chatbots are the most common UX that uses this interaction pattern, it can apply more broadly. It can be helpful to think of `history` as a way to manage state or memory, since the LLM itself does not store any persistent context from one interaction to the next. Nothing restricts the use of these concepts to a chatbot UX.

Using Freeplay with Multi-Turn Chatbots

What's different about using Freeplay with a chatbot? There are a few important things to be aware of:

- Prompt Templates: You'll define a special `history` object in a prompt template, allowing you to pass conversation history at the right point.
- Recording Multi-Turn Sessions: You'll record `history` with each new chatbot turn, as well as record messages at the start and end of each trace to make it easy to view the `input` and `output` (see Traces documentation).
- Managing Datasets & Testing: You'll curate datasets that contain `history` so you can simulate accurate conversation scenarios when testing.
- Configuring Auto-Evaluations: If you're using model-graded evals, you'll be able to target `history` objects for realtime monitoring or test scenarios.

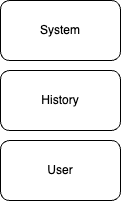

History in Prompt Templates

History should be configured within your Prompt Templates in Freeplay. When configuring your Prompt Template, you will add a message of type `history` wherever your history messages should be inserted. This tells Freeplay how messages should be ordered when the prompt template is formatted.

The most common configuration orders the messages like this:

- a system message
- the `history` message
- the most recent user message

Creating this configuration on a Freeplay prompt template would look like this:

This tells Freeplay to insert the history messages in between the system message and the most recent user message when formatting a prompt.

You must define history in a prompt template before you can pass history values at record time and have them saved properly for use in datasets, testing, etc.

Why configure history explicitly in the prompt template?

While it may seem redundant at first to explicitly configure the placement of history, it allows for the support of more varied prompting patterns. For example, you may have some predefined context that you use to seed the model each time and include multiple messages in a prompt template. In that case, a prompt template could look like the following:

This would tell Freeplay to insert history messages after the first Assistant/User pair, rather than directly after the System message.
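To make the placement rule concrete, here is a minimal Python sketch of how a history placeholder could be expanded when a template is formatted. It is an illustration under assumptions, not Freeplay's implementation: the `expand_history` helper, the `{"role": "history"}` placeholder shape, and the seed message text are all hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical marker for where history belongs in a template.
HISTORY_PLACEHOLDER: Message = {"role": "history"}


def expand_history(template: List[Message], history: List[Message]) -> List[Message]:
    """Return the template with the placeholder replaced by the history messages."""
    if HISTORY_PLACEHOLDER not in template:
        if history:
            # Mirrors the rule that history must be defined in the template first.
            raise ValueError("template has no history placeholder")
        return list(template)
    expanded: List[Message] = []
    for message in template:
        if message == HISTORY_PLACEHOLDER:
            expanded.extend(history)
        else:
            expanded.append(message)
    return expanded


history = [
    {"role": "user", "content": "what are some dinner ideas..."},
    {"role": "assistant", "content": "here are some dinner ideas..."},
]

# Most common layout: system, then history, then the newest user message.
common_template = [
    {"role": "system", "content": "You are a polite assistant..."},
    HISTORY_PLACEHOLDER,
    {"role": "user", "content": "how do I make them healthier?"},
]

# Seeded layout: a fixed user/assistant exchange precedes the history.
seeded_template = [
    {"role": "system", "content": "You are a polite assistant..."},
    {"role": "user", "content": "Example question used to seed the model"},
    {"role": "assistant", "content": "Example answer used to seed the model"},
    HISTORY_PLACEHOLDER,
    {"role": "user", "content": "how do I make them healthier?"},
]

common_roles = [m["role"] for m in expand_history(common_template, history)]
seeded_roles = [m["role"] for m in expand_history(seeded_template, history)]
print(common_roles)
print(seeded_roles)
```

In the seeded layout the history lands after the fixed exchange, so the model always sees the seed messages first and the newest user message last.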

Multi-Turn Chat in Logging and Observability

Freeplay makes it easy to understand complex, multi-turn conversations in your LLM applications. Using Traces, you can log conversations with clear input/output pairs that mirror what your users actually see—even when multiple prompts and LLM calls are happening behind the scenes.

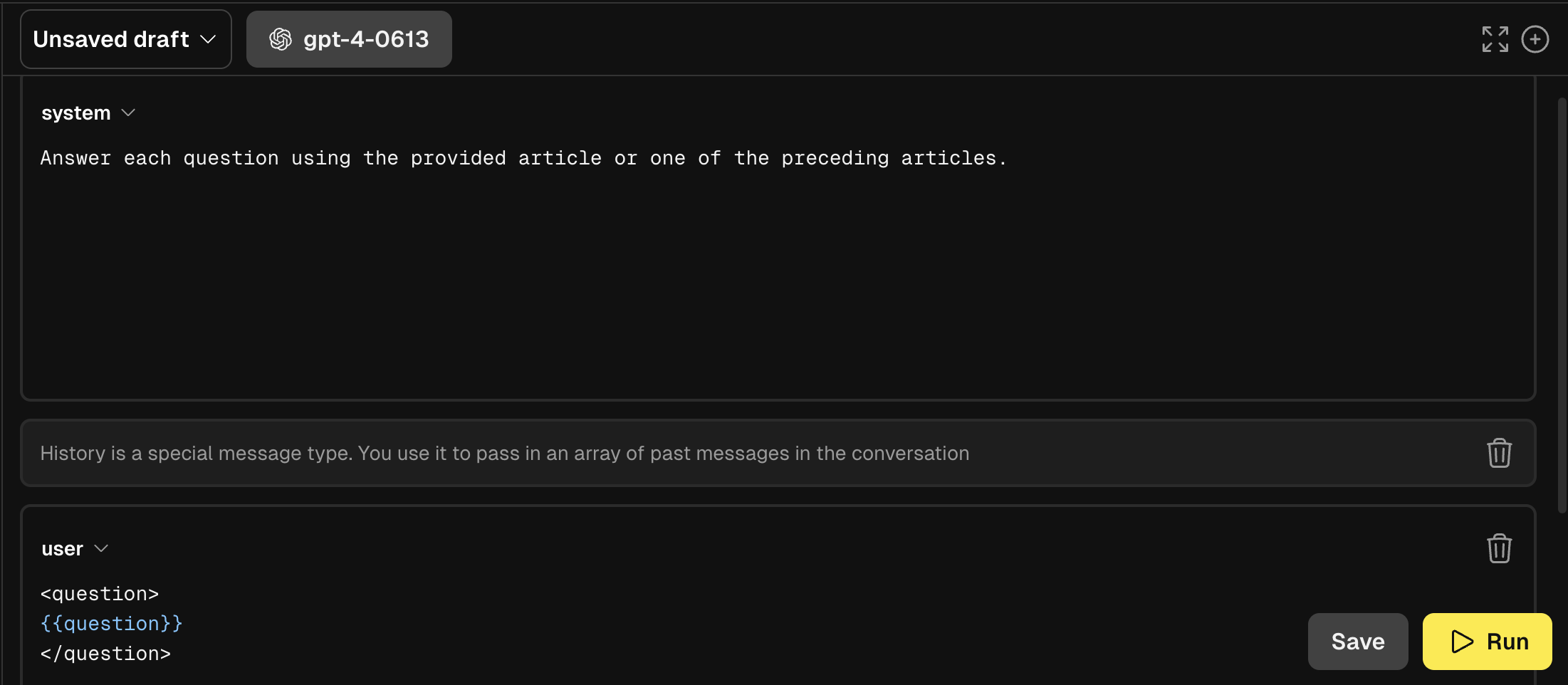

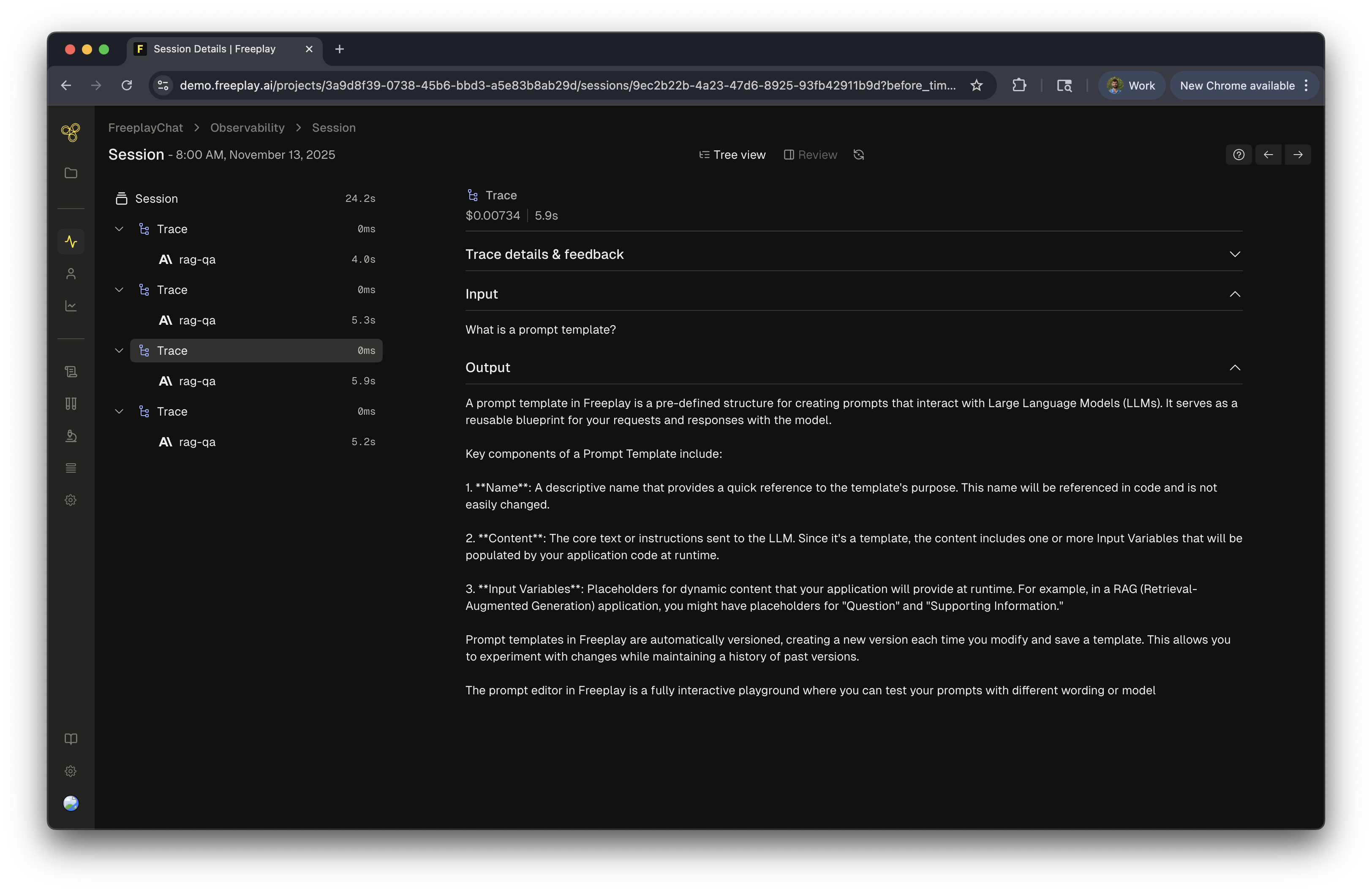

Understanding Session and Trace Views

When you navigate to a Session in Freeplay's Observability dashboard, you'll see the conversation from your user's perspective. Each trace represents a single turn in the conversation—the user's question and the AI's response.

In the left sidebar, you can see the conversation structure: a Session containing multiple Traces, each with their underlying completions. The main view shows the user-facing input and output, making it easy to understand the conversation flow at a glance.

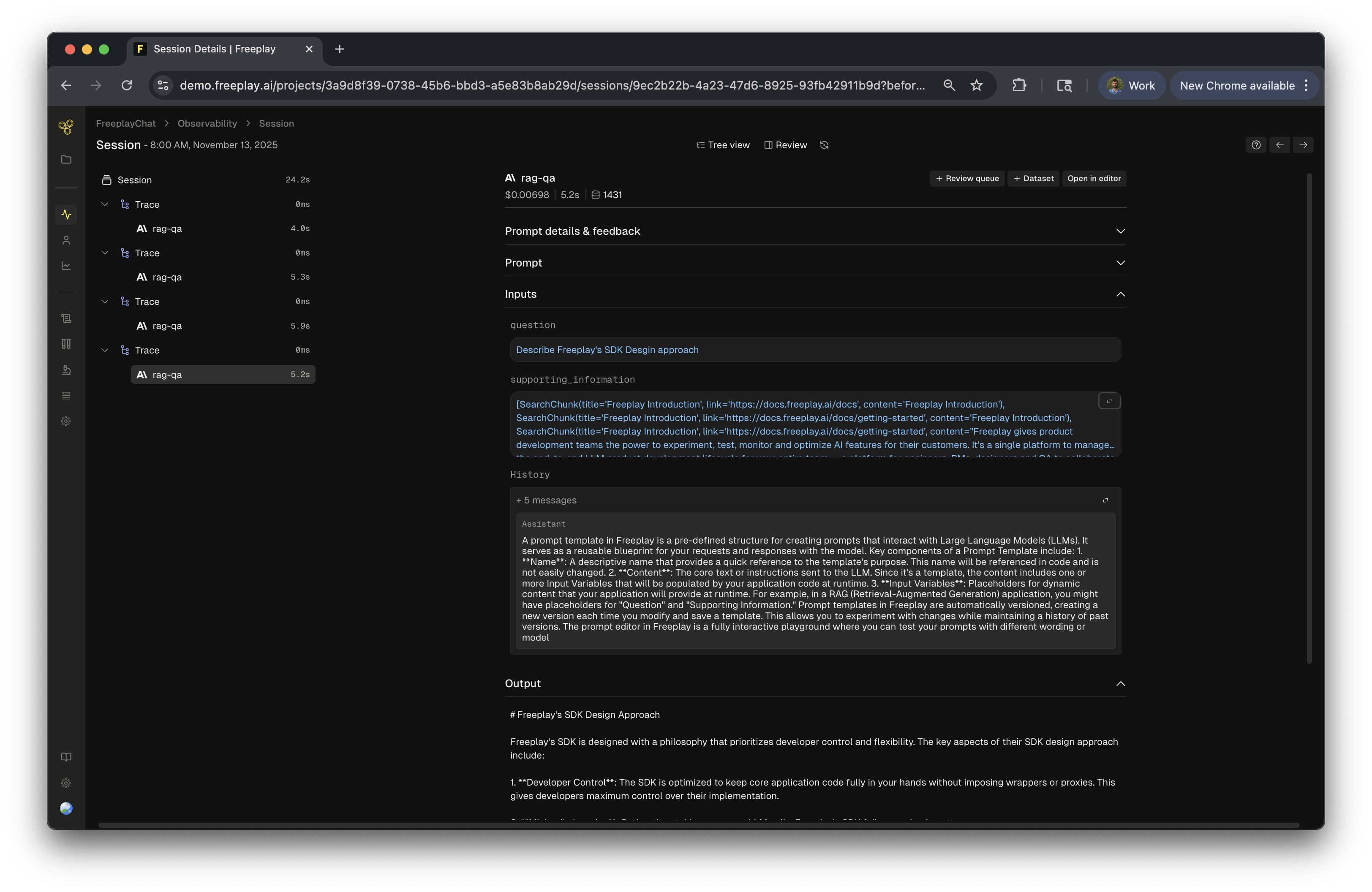

Inspecting What's Happening Under the Hood

Click on any trace to see what's actually happening behind that single user interaction. Often, a single user-facing response involves multiple LLM calls—like retrieval, reasoning, and generation steps.

The trace detail view shows you all the completions that contributed to this response. In this example, two prompts were called to answer the user's question about prompt bundling. You can see the input, output, timing, and cost for the entire trace.

Diving into Individual Completions

Click on any completion within a trace to examine it in detail. Here you can see the full prompt, the model's response, inputs, and the conversation history. This granular information gives you the context you need to analyze and review the conversation.

Each completion can be added to a dataset for testing, opened in the prompt editor, or added to a review queue for human evaluation. Any customer feedback logged at the completion level automatically rolls up to the trace level, making it easy to spot which user interactions need review.
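The Session, Trace, and completion hierarchy described above can be pictured as a small data model. The sketch below is a hypothetical illustration in Python, not Freeplay's schema: the class names, fields, prompt names, and the roll-up method are assumptions chosen to show how completion-level feedback could surface on the trace.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Completion:
    prompt_name: str
    output: str
    # Customer feedback logged directly on this completion (hypothetical shape).
    feedback: Dict[str, str] = field(default_factory=dict)


@dataclass
class Trace:
    input: str   # what the user asked in this turn
    output: str  # what the user saw in response
    completions: List[Completion] = field(default_factory=list)

    def rolled_up_feedback(self) -> Dict[str, str]:
        """Merge feedback from every completion; later completions win on key clashes."""
        merged: Dict[str, str] = {}
        for completion in self.completions:
            merged.update(completion.feedback)
        return merged


@dataclass
class Session:
    traces: List[Trace] = field(default_factory=list)

    def traces_needing_review(self) -> List[Trace]:
        """Return the turns where any completion received feedback."""
        return [t for t in self.traces if t.rolled_up_feedback()]


session = Session(traces=[
    Trace(
        input="How does prompt bundling work?",
        output="Prompt bundling lets you...",
        completions=[
            Completion("retrieve_context", "..."),
            Completion("answer_question", "Prompt bundling lets you...", {"thumbs": "down"}),
        ],
    ),
    Trace(
        input="Thanks!",
        output="You're welcome.",
        completions=[Completion("answer_question", "You're welcome.")],
    ),
])

flagged = session.traces_needing_review()
print([t.input for t in flagged])
```

Only the first turn is flagged, because feedback on one of its completions is visible at the trace level even though the user never interacted with that completion directly.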

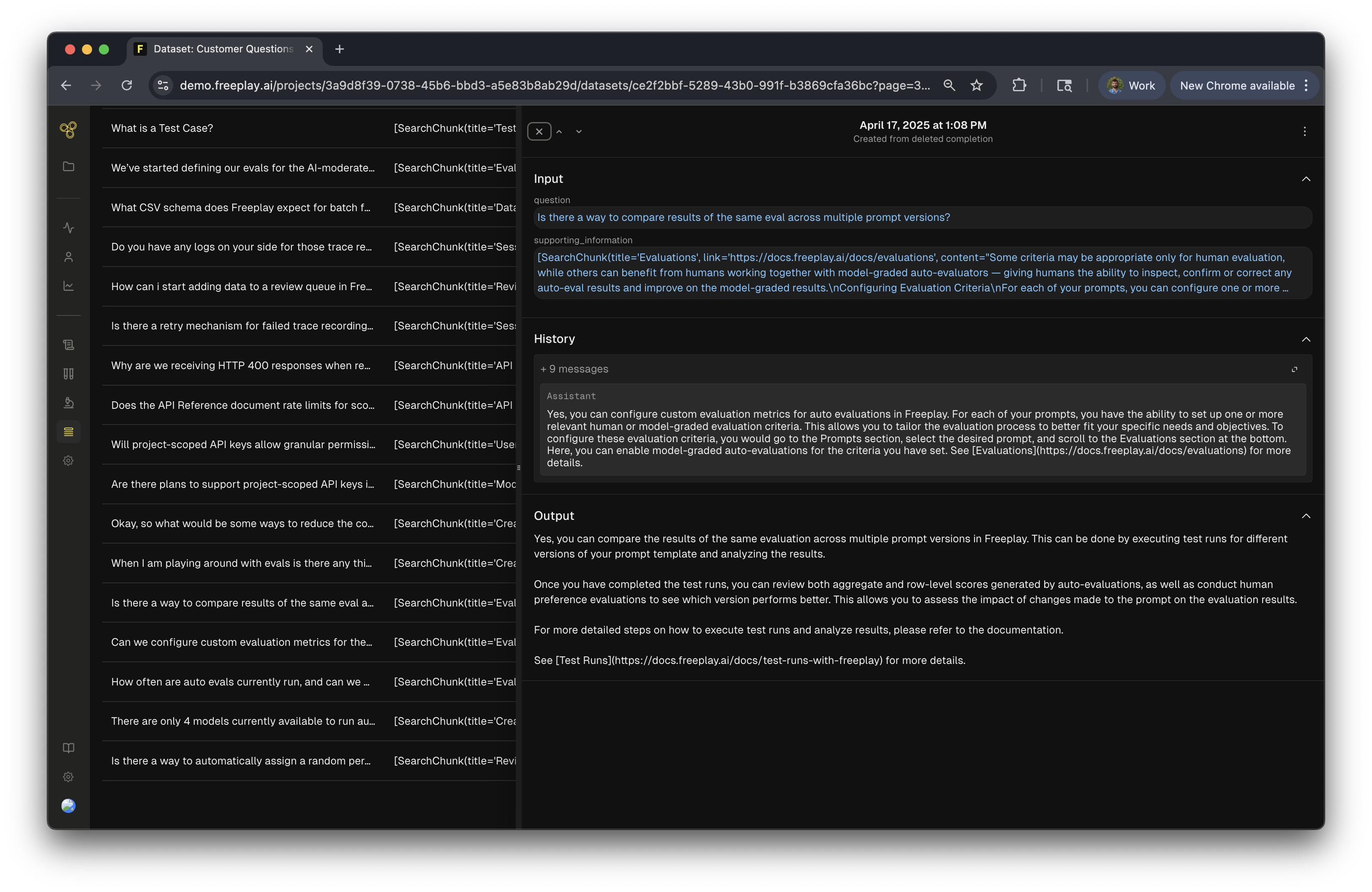

Multi-Turn Chat Testing

Save and modify `history` as part of datasets to simulate real conversations.

Whenever you save an observed conversation turn that includes `history`, it will be included in the dataset for future testing. You can also edit or add `history` objects to a dataset at any time in case you want to control exactly what goes into a test scenario. Auto-evals can target `history` as well for faster test analysis.

Datasets and Test Runs

When building a chatbot, the testing unit remains at the Completion level but includes history when relevant. Consider this example again:

If we were to save the completion that generates Turn Two to a dataset, we would also get the preceding context from Turn One, which would exist in the history object for the new completion.

Subsequent Test Runs using that Test Case would treat Turn One as static, meaning it is not recomputed during the Test Run. It would be passed as context when Turn Two is regenerated so that you can simulate that exact point in the conversation when testing.

Here's a simple sample dataset row that includes several messages in the history object.
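As a rough illustration of what such a row carries, the Python sketch below derives a row for a given turn from a recorded conversation: everything before that turn's user message becomes static history, and the user message becomes the input. The `build_dataset_row` helper, the `question` input name, and the row shape are hypothetical, not Freeplay's dataset format.

```python
from typing import Dict, List, TypedDict

Message = Dict[str, str]


class DatasetRow(TypedDict):
    inputs: Dict[str, str]
    history: List[Message]


def build_dataset_row(conversation: List[Message], turn: int) -> DatasetRow:
    """Build a row for a 1-based turn of an alternating user/assistant conversation."""
    user_index = 2 * (turn - 1)
    if turn < 1 or user_index >= len(conversation):
        raise IndexError(f"conversation has no turn {turn}")
    user_message = conversation[user_index]
    if user_message["role"] != "user":
        raise ValueError("expected each turn to start with a user message")
    return {
        "inputs": {"question": user_message["content"]},
        # Earlier turns are stored as-is and are not regenerated during a test run.
        "history": conversation[:user_index],
    }


conversation = [
    {"role": "user", "content": "what are some dinner ideas..."},
    {"role": "assistant", "content": "here are some dinner ideas..."},
    {"role": "user", "content": "how do I make them healthier?"},
    {"role": "assistant", "content": "here are some healthier versions..."},
]

row = build_dataset_row(conversation, turn=2)
print(row["inputs"])
print(len(row["history"]))
```

The assistant's original Turn Two reply is deliberately left out of the row, since that is the output a test run would regenerate.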

Auto Evaluations

History can be targeted in model-graded auto-evaluation templates like any other variable using the `{{history}}` parameter. This allows you to ask questions like: Is the current output factually accurate given the preceding context?

    Determine whether or not the output is factually consistent with the preceding context
    The output should be deemed inaccurate if it contains any logical contradictions with
    the preceding context

    <Output>
    {{output}}
    </Output>

    <Preceding Context>
    {{history}}
    </Preceding Context>

Multi-Turn Chat in the SDK

When formatting your prompts, pass the previous messages as an array to the `history` parameter. The returned `messages` array will have the history messages inserted in the right place, as defined in your prompt template. See more details in our SDK docs here.

    # Prior turns, oldest first; each message is a dict with "role" and "content"
    previous_messages = [
        {"role": "user", "content": "what are some dinner ideas..."},
        {"role": "assistant", "content": "here are some dinner ideas..."},
    ]
    prompt_vars = {"question": "how do I make them healthier?"}
    formatted_prompt = fpClient.prompts.get_formatted(
        project_id=project_id,
        template_name="SamplePrompt",
        environment="latest",
        variables=prompt_vars,
        history=previous_messages  # pass the history messages here
    )
    print(formatted_prompt.messages)
    # output:
    # [
    #   {'role': 'system', 'content': 'You are a polite assistant...'},
    #   {'role': 'user', 'content': 'what are some dinner ideas...'},
    #   {'role': 'assistant', 'content': 'here are some dinner ideas...'},
    #   {'role': 'user', 'content': 'how do I make them healthier?'}
    # ]

A complete multi-turn example in JavaScript:

    // Call LLM Function

    async function callLLM(input, history, sessionInfo) {
      // LLM Call occurs here....

      // Record the interaction to Freeplay
      await freeplay.recordings.create({
        projectId,
        allMessages: [...history, responseMessage], // History is passed to capture the conversation history.
        inputs: { input },
        sessionInfo: sessionInfo,
        promptInfo: formattedPrompt.promptInfo,
        callInfo: getCallInfo(formattedPrompt.promptInfo, start, end),
        responseInfo: {
          isComplete: true,
        },
      });
      return responseMessage;
    }

    // Multi-turn chat
    // Create a new session
    const session = freeplay.sessions.create({
      customMetadata: { conversation_topic: "home repair" },
    });
    const sessionInfo = getSessionInfo(session);

    // Initialize conversation history
    let history = [
      {
        role: "user",
        content: "Why isn't my sink working?",
      },
    ];

    // First interaction
    console.log("User: Why isn't my sink working?");
    const response1 = await callLLM("", history, sessionInfo);
    // Update history with the assistant's response
    history.push(response1);

    // Second interaction - continue the conversation
    history.push({
      role: "user",
      content: "Tell me more about checking the P-trap",
    });
    console.log("User: Tell me more about checking the P-trap");
    const response2 = await callLLM("", history, sessionInfo);
    // Update history again
    history.push(response2);

    // Third interaction
    history.push({
      role: "user",
      content: "What tools do I need for this job?",
    });
    const response3 = await callLLM("", history, sessionInfo);

    // In a real application, you would fetch messages from the backend
    // Here we're just using the history we've built up
    // New follow-up question in the restored session
    history.push(response3);
    history.push({
      role: "user",
      content: "How long should this repair take?",
    });
    const response4 = await callLLM("", history, sessionInfo);

Returning to the Python example, you can then use the formatted prompt and its messages to make a call to your LLM provider:

    import time

    # time the provider call so latency can be recorded
    s = time.time()
    chat_response = openaiClient.chat.completions.create(
        model=formatted_prompt.prompt_info.model,
        messages=formatted_prompt.messages,
        **formatted_prompt.prompt_info.model_parameters
    )
    e = time.time()
    latest_message = chat_response.choices[0].message

You will then pass the full set of messages back to Freeplay on the record call:

    # append the new assistant message to the messages that were sent
    all_messages = [*formatted_prompt.messages, latest_message]
    # record the call
    payload = RecordPayload(
        project_id=project_id,
        all_messages=all_messages,
        inputs=prompt_vars,
        session_info=session,
        prompt_version_info=formatted_prompt.prompt_info,
        call_info=CallInfo.from_prompt_info(formatted_prompt.prompt_info, start_time=s, end_time=e),
        response_info=ResponseInfo(
            is_complete=chat_response.choices[0].finish_reason == 'stop'
        )
    )
    completion_info = fpClient.recordings.create(payload)

You can then repeat that pattern with each turn in the conversation, continuing to append to and update the `all_messages` object.
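The per-turn loop can be sketched as follows. This is a minimal outline under stated assumptions: `format_prompt`, `call_llm`, and `record_turn` are hypothetical stand-ins for the format, provider, and record calls, so the sketch runs without network access. It also assumes the history passed on each turn contains only prior user and assistant messages, with the system message coming from the template.

```python
from typing import Dict, List

Message = Dict[str, str]

SYSTEM_MESSAGE: Message = {"role": "system", "content": "You are a polite assistant..."}
recorded: List[List[Message]] = []  # stand-in for what would be sent to Freeplay


def format_prompt(question: str, history: List[Message]) -> List[Message]:
    """Hypothetical stand-in for formatting a template with history inserted."""
    return [SYSTEM_MESSAGE, *history, {"role": "user", "content": question}]


def call_llm(messages: List[Message]) -> Message:
    """Hypothetical stand-in for the provider call; echoes the last user message."""
    return {"role": "assistant", "content": f"(reply to: {messages[-1]['content']})"}


def record_turn(all_messages: List[Message]) -> None:
    """Hypothetical stand-in for recording one completion."""
    recorded.append(all_messages)


def run_turn(question: str, history: List[Message]) -> List[Message]:
    """Run one turn and return the history to pass into the next turn."""
    messages = format_prompt(question, history)
    reply = call_llm(messages)
    all_messages = [*messages, reply]
    record_turn(all_messages)
    # Drop the system message so it is not duplicated on the next format call.
    return [m for m in all_messages if m["role"] != "system"]


history: List[Message] = []
for question in ["what are some dinner ideas...", "how do I make them healthier?"]:
    history = run_turn(question, history)

print([m["role"] for m in recorded[-1]])
```

Each recorded turn carries the full conversation up to that point, while the history handed to the next turn grows by exactly one user message and one assistant message.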

An end-to-end code recipe can be found here.

