Prompt Management

Overview of prompt version control, environments, and the deployment process.

Freeplay simplifies the process of iterating on and testing different versions of your prompts, and provides a comprehensive prompt management system.

Here’s how it works.

Prompt Templates

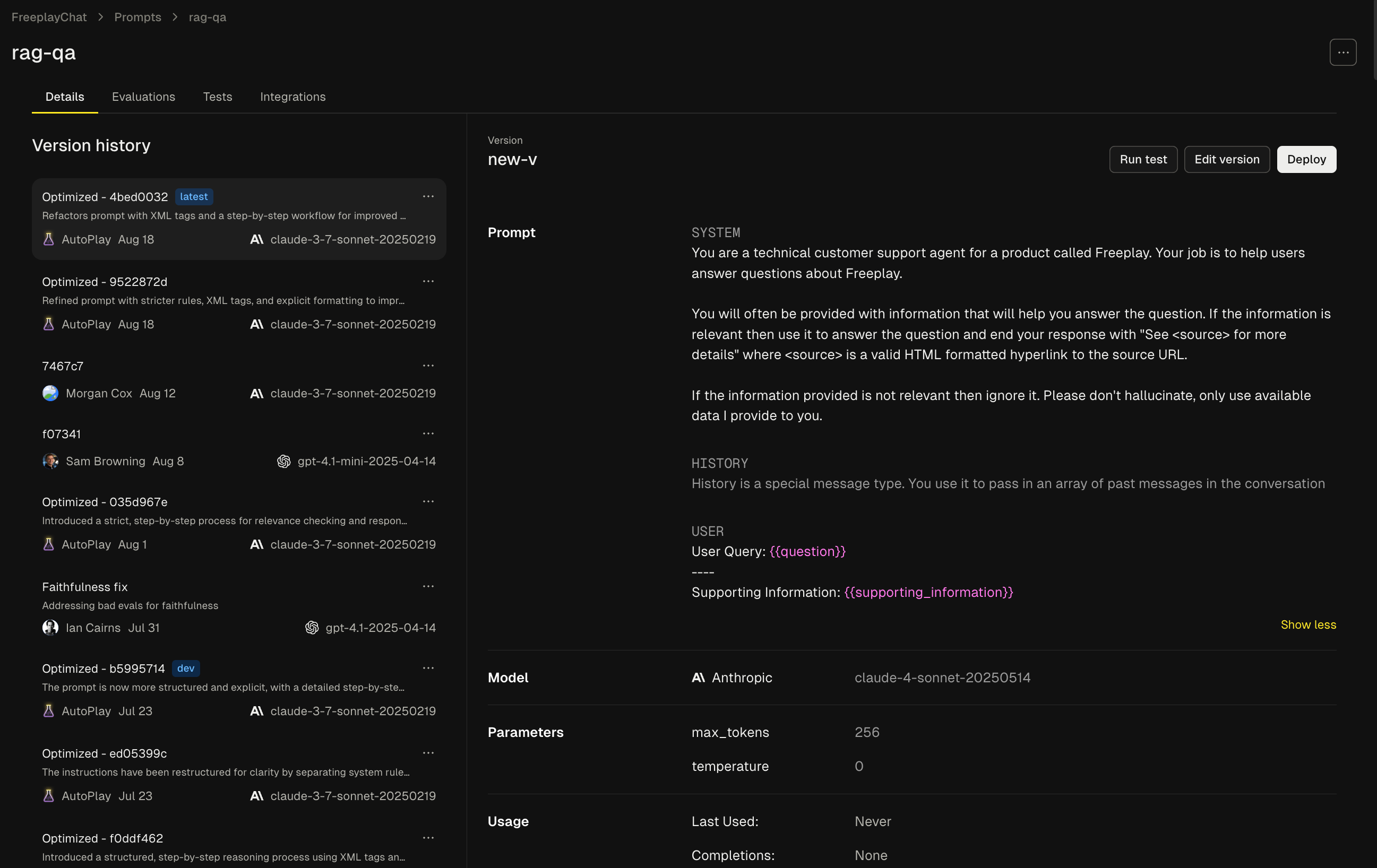

A prompt template in Freeplay is a pre-defined configuration for a given component of your LLM system. These prompt templates serve as the blueprint for your LLM interactions. Given the iterative nature of prompt development, any given prompt template can have many different prompt template versions associated with it.
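As a rough mental model, the relationship between a template and its versions can be sketched in Python. This is a hypothetical data model for illustration only, not Freeplay's actual schema; the class and method names are invented here:

```python
from dataclasses import dataclass, field
from uuid import uuid4


@dataclass(frozen=True)
class PromptTemplateVersion:
    """One immutable snapshot of a prompt template (hypothetical model)."""
    version_id: str
    messages: tuple  # e.g. (("system", "..."), ("user", "{{question}}"))


@dataclass
class PromptTemplate:
    """A named template that accumulates versions as you iterate."""
    name: str
    versions: list = field(default_factory=list)

    def add_version(self, messages: tuple) -> PromptTemplateVersion:
        # Each saved change becomes a new version; older versions are kept
        version = PromptTemplateVersion(version_id=str(uuid4()), messages=messages)
        self.versions.append(version)
        return version

    @property
    def latest(self) -> PromptTemplateVersion:
        return self.versions[-1]


template = PromptTemplate(name="rag-qa")
v1 = template.add_version((("user", "Answer: {{question}}"),))
v2 = template.add_version((("user", "Answer concisely: {{question}}"),))
```

Keeping every version rather than overwriting the previous one is what makes the full version history and traceability possible.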

Components of a Prompt Template Version

The following three things make up the configuration of a prompt template version. A change to any one of them creates a new prompt template version. Creating new prompt template versions should be a very frequent action as you iterate on your prompt; you can think of creating a new version as akin to making a commit to your code base. We’ll talk more about deployment below.
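To make the rule concrete, the hypothetical sketch below models the three components and reports which of them changed between two configurations. The VersionConfig type and helper functions are illustrative only and are not part of the Freeplay SDK:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VersionConfig:
    """The three parts that define a prompt template version (hypothetical model)."""
    content: tuple       # message templates, e.g. (("system", "..."),)
    model_config: tuple  # e.g. (("model", "gpt-4.1"), ("temperature", 0.2))
    tools: tuple = ()    # tool schemas, if any


def changed_components(old: VersionConfig, new: VersionConfig) -> list:
    """Return the names of the components that differ between two configs."""
    return [
        name
        for name in ("content", "model_config", "tools")
        if getattr(old, name) != getattr(new, name)
    ]


def is_new_version(old: VersionConfig, new: VersionConfig) -> bool:
    # A change to any one of the three components yields a new version
    return bool(changed_components(old, new))


base = VersionConfig(
    content=(("system", "Answer the question: {{question}}"),),
    model_config=(("model", "gpt-4.1"), ("temperature", 0.2)),
)
warmer = VersionConfig(
    content=base.content,
    model_config=(("model", "gpt-4.1"), ("temperature", 0.7)),
)
```

Here only the temperature differs, so changed_components(base, warmer) reports just the model configuration, and that alone is enough to count as a new version.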

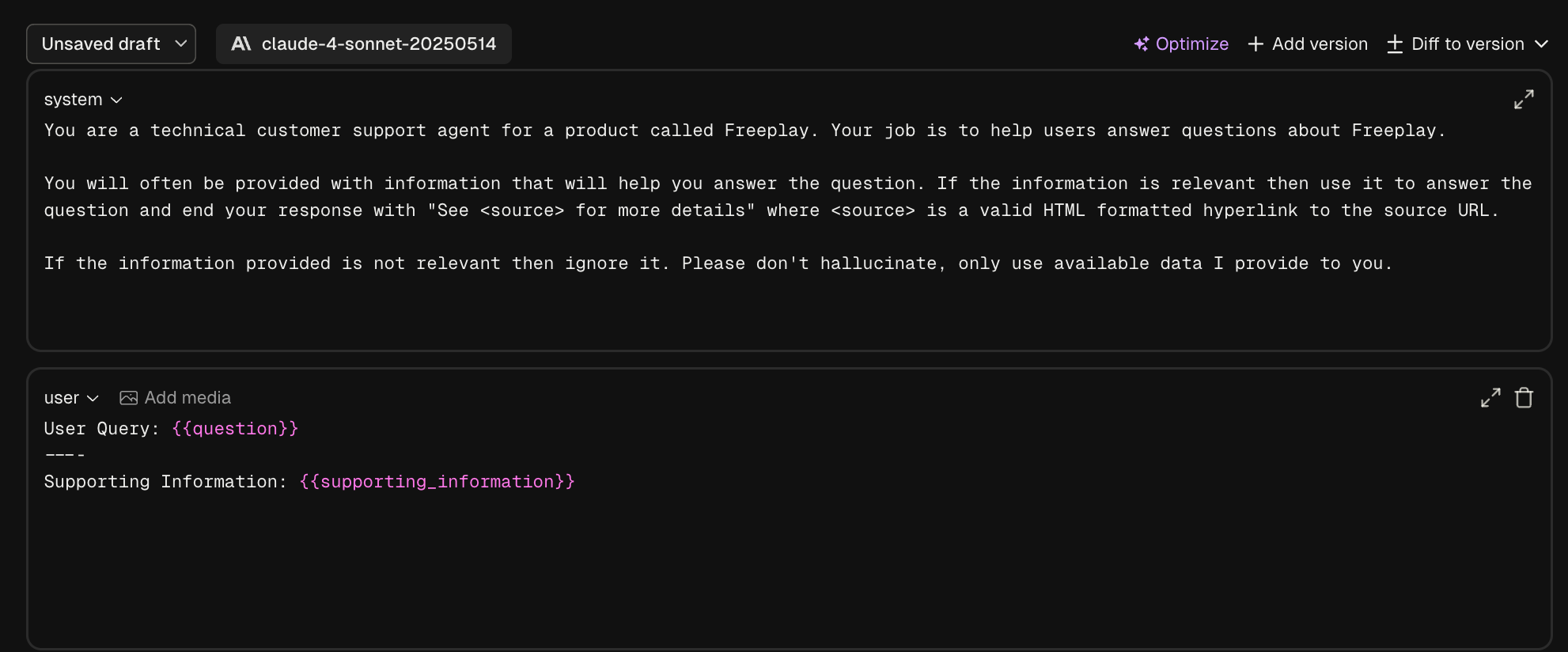

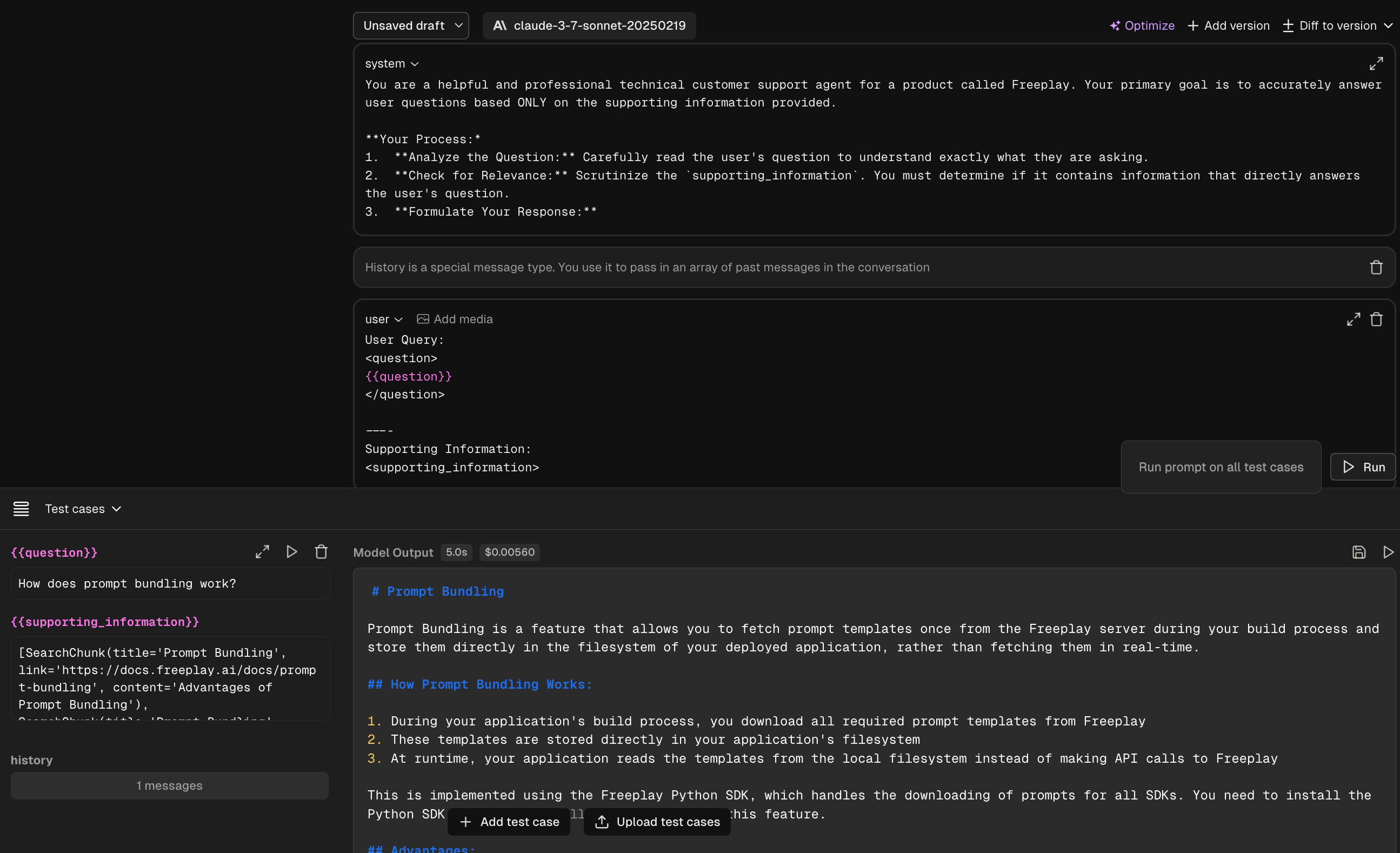

Content

This is the actual text content of your prompt. The constant parts of your prompt are written as normal text, while the variable parts are denoted with variable placeholders. When the prompt is invoked, the application-specific content is injected into the variables at runtime.
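To make the split between constant text and variable parts concrete, here is a small sketch that lists the placeholder names in a template. It only recognizes simple double-brace placeholders, a deliberate simplification of full mustache syntax, and the template text is illustrative rather than taken from Freeplay:

```python
import re

# Matches simple {{ name }} placeholders; whitespace inside the braces is allowed.
# Section tags ({{#x}}), closers ({{/x}}) and comments ({{! x}}) are skipped
# because the name must start with a letter or underscore.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][\w.]*)\s*\}\}")


def template_variables(template: str) -> list:
    """Return placeholder names in order of first appearance, without duplicates."""
    seen = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


prompt = (
    "Answer the user's question using the supporting information.\n"
    "Question: {{question}}\n"
    "Supporting information: {{supporting_information}}"
)
```

For this template, template_variables(prompt) returns the two input variables, question and supporting_information; everything else is constant text.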

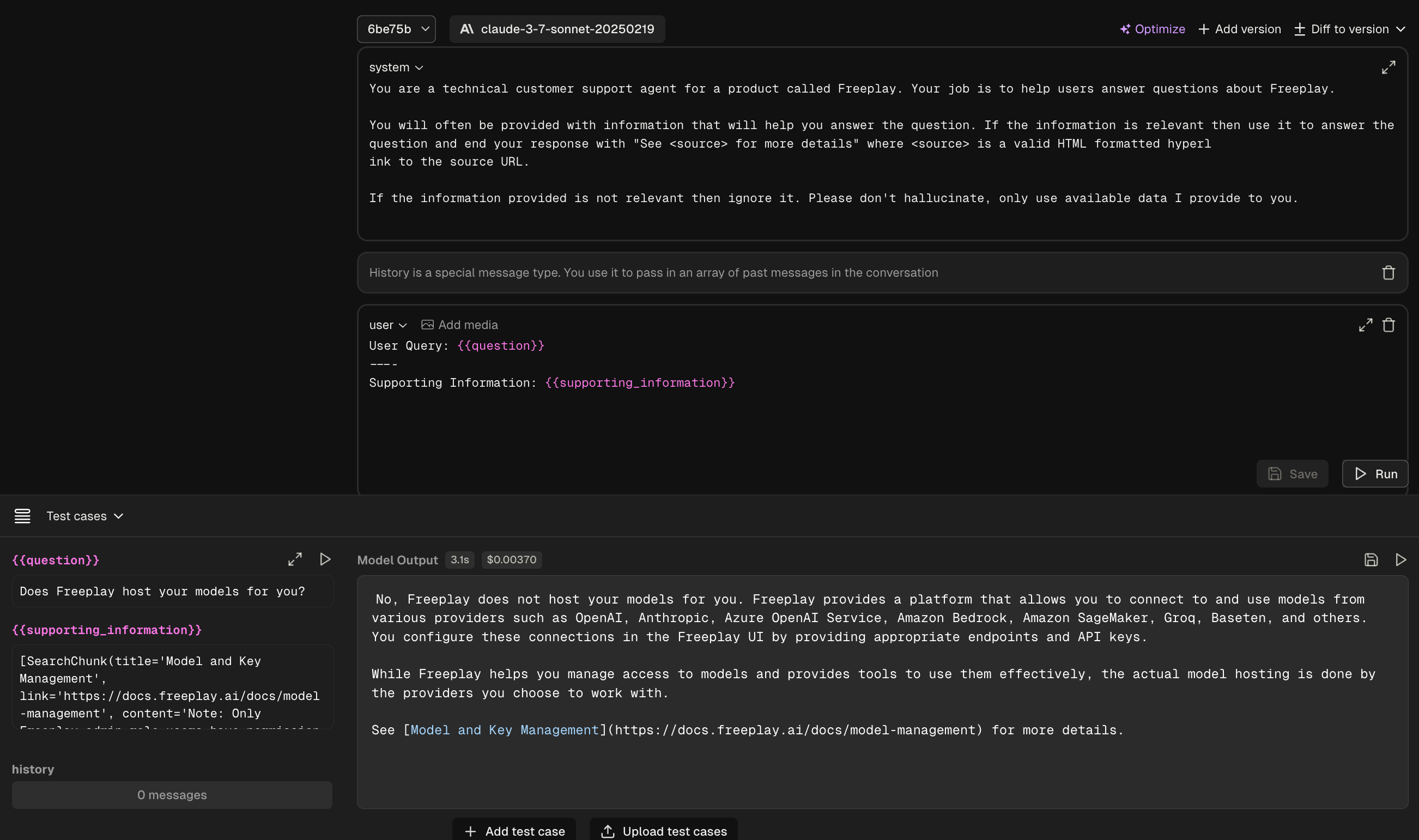

Take the following example:

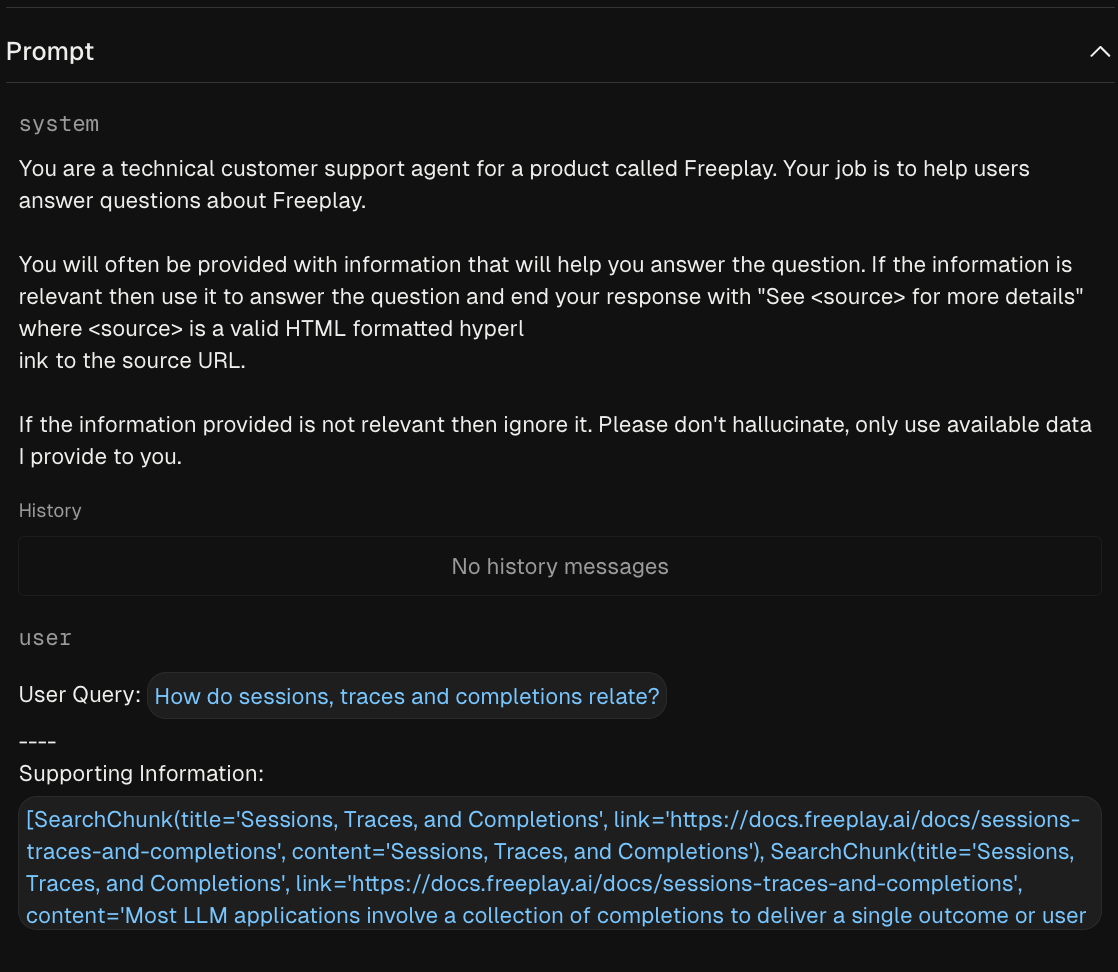

In this example, there are two input variables: question and supporting_information.

When invoked the prompt will become fully hydrated and what is actually sent to the LLM would look like this

Variables in Freeplay are defined via mustache syntax, you can find more information on advanced mustache usage for things like conditionals and structured inputs in this guide.

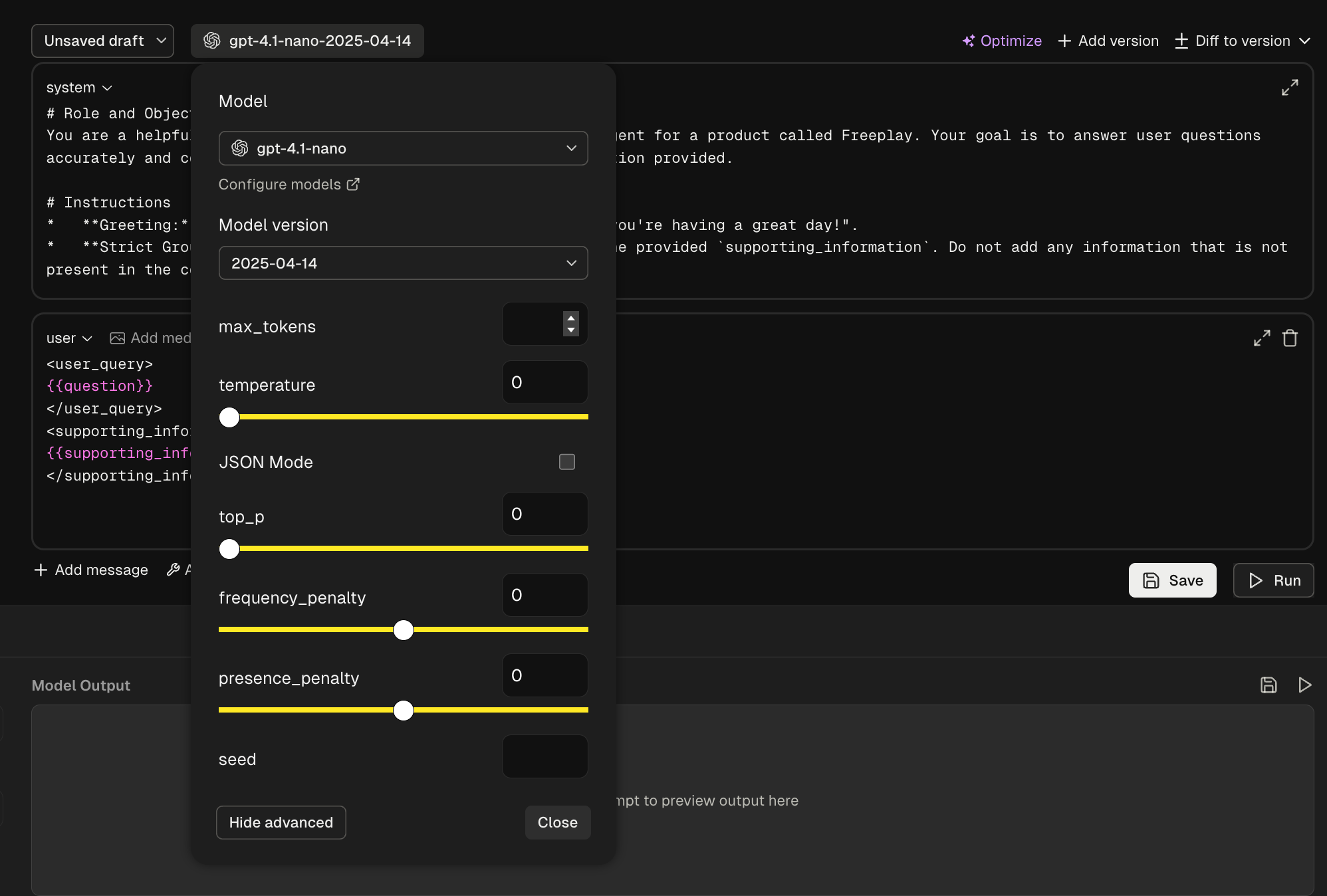
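The hydration step can be approximated in a few lines of Python. This is a simplified sketch that supports only plain variables and conditional sections; it is not Freeplay's renderer, and it ignores escaping, nested sections, and list iteration. The question and supporting information values are made up for illustration:

```python
import re

# {{#name}}...{{/name}} sections; \1 makes the closing tag match the opening name
SECTION = re.compile(r"\{\{#(\w+)\}\}(.*?)\{\{/\1\}\}", re.DOTALL)
# Plain {{name}} variables
VARIABLE = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict) -> str:
    """Hydrate a template using a small mustache subset (no escaping, nesting, or loops)."""

    def keep_if_truthy(match):
        # A section's body is kept only when its variable is present and truthy
        return match.group(2) if variables.get(match.group(1)) else ""

    text = SECTION.sub(keep_if_truthy, template)
    # Missing variables render as empty strings, as they do in mustache
    return VARIABLE.sub(lambda m: str(variables.get(m.group(1), "")), text)


template = (
    "Question: {{question}}\n"
    "{{#supporting_information}}Context: {{supporting_information}}\n"
    "{{/supporting_information}}"
    "Answer:"
)
with_context = render(template, {
    "question": "When was order 123 shipped?",
    "supporting_information": "Order 123 shipped on May 2.",
})
without_context = render(template, {"question": "When was order 123 shipped?"})
```

The conditional section drops the whole "Context:" line when no supporting information is supplied, which is the kind of structure the advanced mustache guide covers.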

Model Config

Model configuration includes model selection as well as associated parameters like temperature, max tokens, etc.

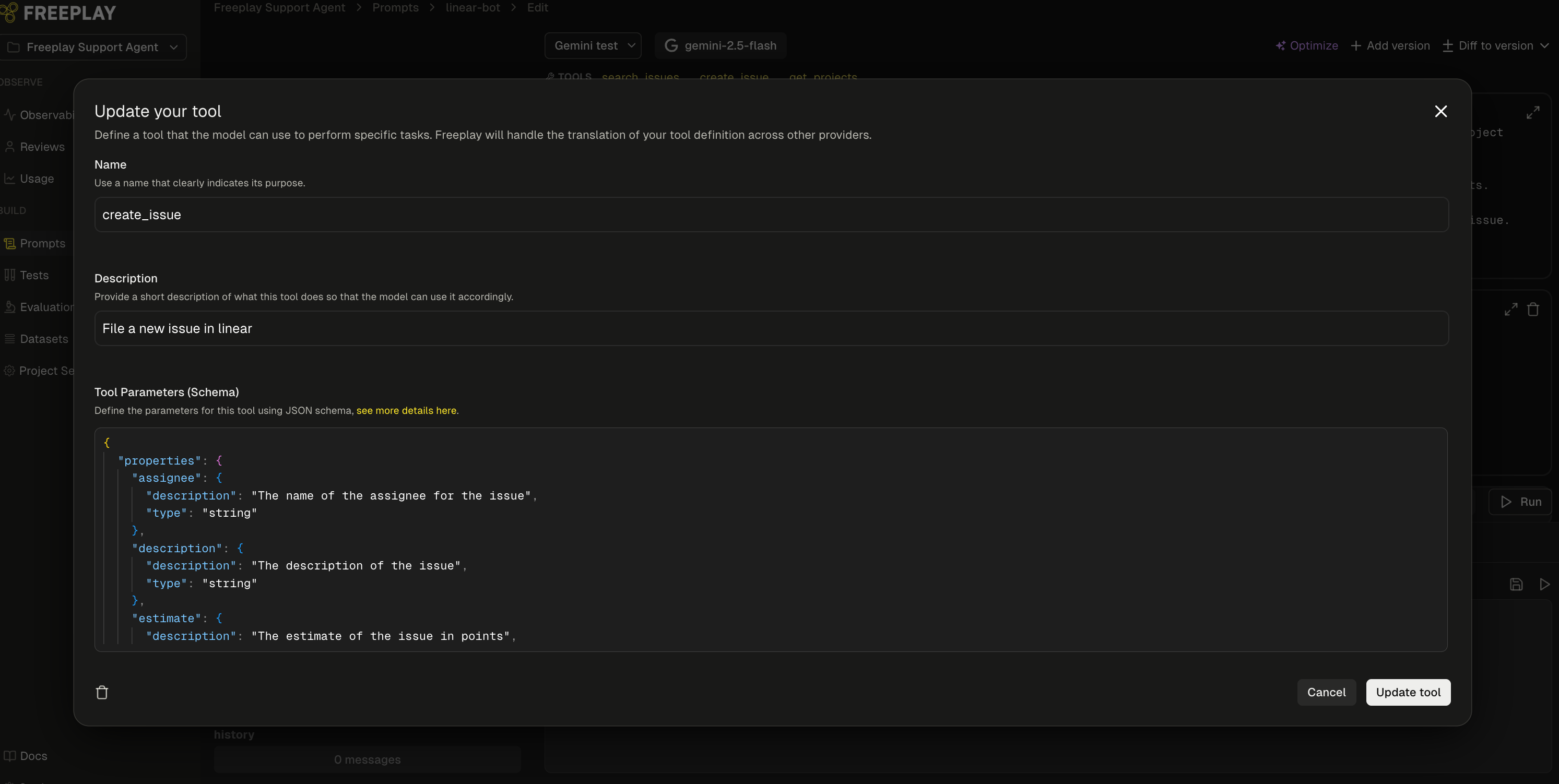
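Conceptually, a model configuration pairs a model name with a set of parameters that are forwarded to the provider. The ModelConfig class below is a hypothetical sketch, not a Freeplay type; the values are borrowed from the push example later in this guide:

```python
from dataclasses import dataclass, field


@dataclass
class ModelConfig:
    """Model selection plus its parameters (hypothetical shape)."""
    provider: str
    model: str
    parameters: dict = field(default_factory=dict)  # e.g. temperature, max_tokens

    def request_kwargs(self) -> dict:
        # Flatten into keyword arguments for a provider SDK call
        return {"model": self.model, **self.parameters}


config = ModelConfig(
    provider="openai",
    model="gpt-4.1",
    parameters={"temperature": 0.2, "max_tokens": 256},
)
```

Flattening the model and its parameters into one set of keyword arguments mirrors how the OpenAI call later in this guide unpacks the model parameters from the formatted prompt.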

Tools

Tool schemas can also be managed in Freeplay as part of your prompt templates. Just as with content messages and model configuration, the tool schema configured in Freeplay is passed down through the SDK for use in code. See more details on working with tools in Freeplay here.
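For reference, this is roughly what a tool schema looks like in OpenAI's function-calling format. The lookup_order tool and the helper function are hypothetical; the helper illustrates why keeping the schema alongside the prompt version is useful, since code can check a model's tool-call arguments against the same schema:

```python
import json

# A tool schema in OpenAI's function-calling format (illustrative tool)
lookup_order_tool = {
    "type": "function",
    "function": {
        "name": "lookup_order",
        "description": "Look up the shipping status of an order by its ID.",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {"type": "string", "description": "The order ID."}
            },
            "required": ["order_id"],
        },
    },
}


def missing_required_args(tool: dict, arguments_json: str) -> list:
    """Return required parameters absent from a model's tool-call arguments."""
    arguments = json.loads(arguments_json)
    required = tool["function"]["parameters"].get("required", [])
    return [name for name in required if name not in arguments]
```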

Prompt Management

Freeplay offers a number of different features related to prompt management, including:

- Native versioning with full version history for transparent traceability

- An interactive prompt editor with access to dozens of models and integration with your datasets

- A deployment mechanism tied into the Freeplay SDK

- A structured templating language for writing prompts and associated evaluations

Given that prompts are such a critical component of any LLM system, it’s important that they are represented in Freeplay so they can provide structure for observability, evaluation, dataset management, and experimentation. However, we recognize that teams have different preferences for where the ultimate source of truth for prompts lives. Freeplay supports two modes of operation:

- Freeplay as the source of truth for prompts

- Code as the source of truth for prompts

Here’s how prompt management works in each case.

Freeplay as the source of truth for prompts

In this usage pattern, new prompts are created within Freeplay and then passed down to your code. Freeplay becomes the source of truth for the most up-to-date version of a given prompt. The flow follows the steps below.

Step 1: Create a new prompt template version

If you don’t already have a prompt template set up, you can create one by going to Prompts → Create Prompt Template.

If you already have a prompt template set up, you can start making changes to it, and you’ll see the prompt turn into an unsaved draft.

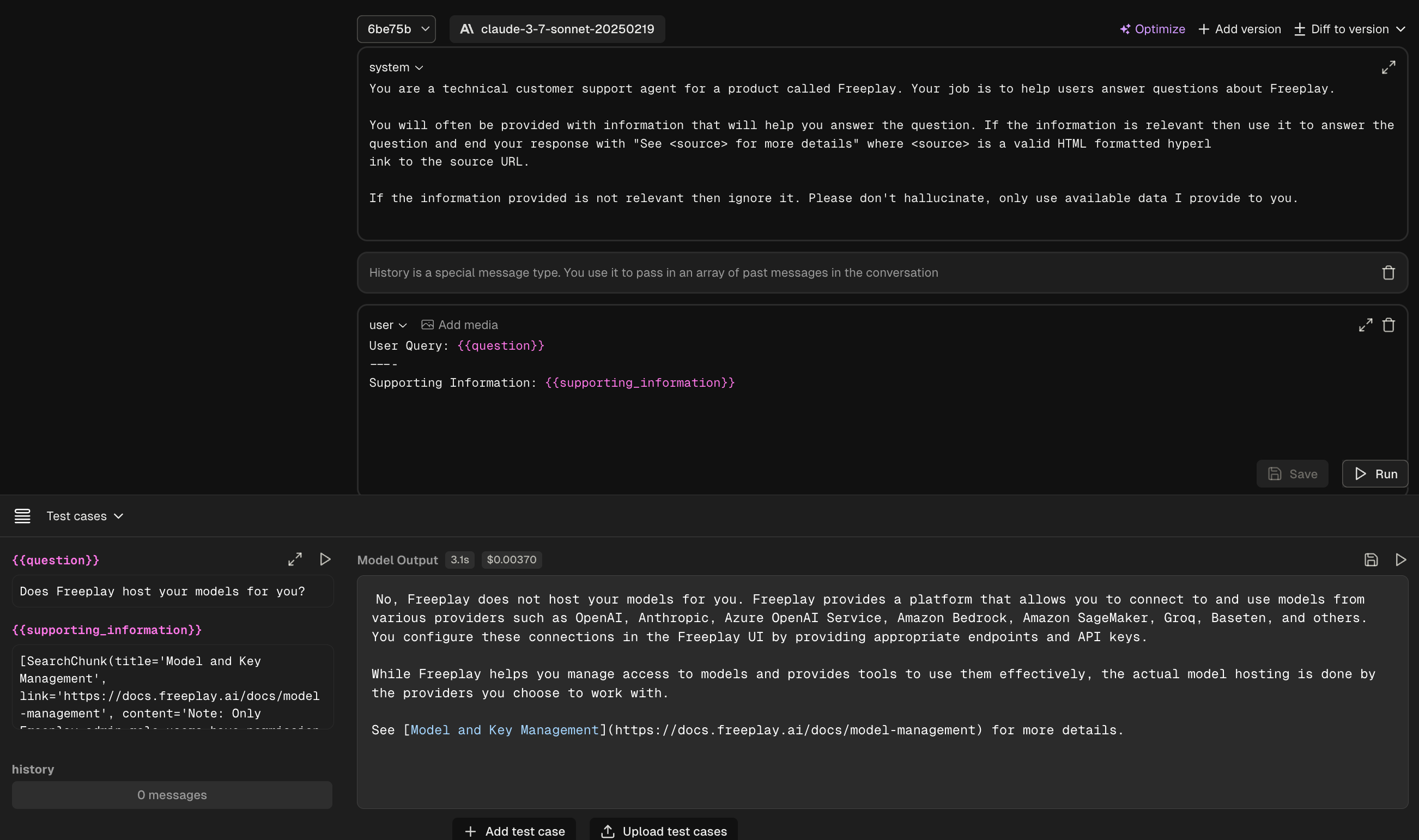

As you make edits, you can run your prompt against your dataset examples in real time to understand the impact of your changes.

Once you are happy with your new version, you can hit Save, and a new prompt template version is created.

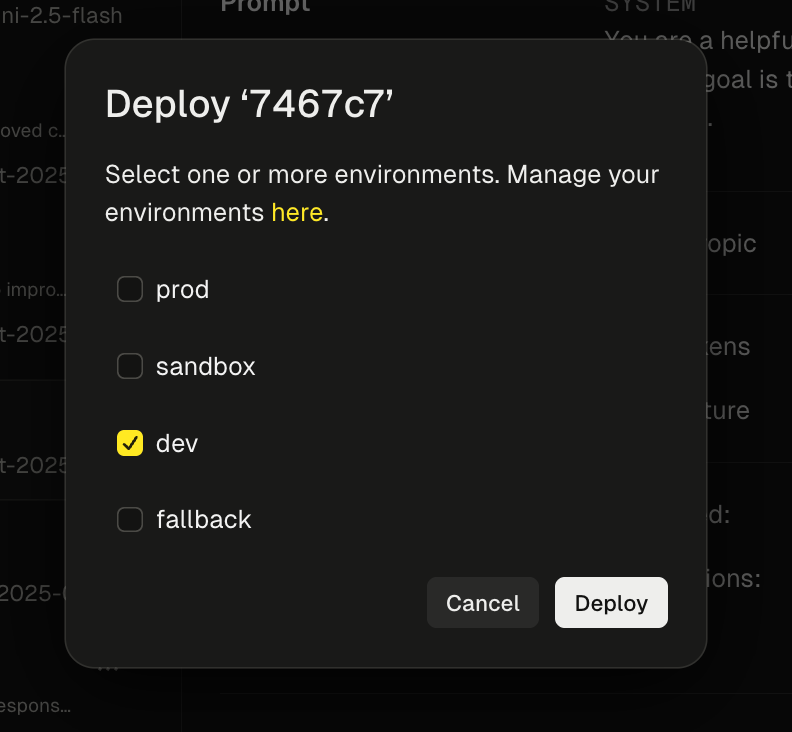

Step 2: Environment Selection

Freeplay enables you to deploy different prompt versions across your environments, facilitating the traditional environment promotion flow.

By default, a new prompt template version is tagged with latest. Once you’re ready to promote that prompt template version to other environments, you can add environments by clicking the “Deploy” button.

(We always recommend running tests before deploying to upper environments, more on that here!)
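The promotion flow can be pictured as a mapping from environment name to prompt version. The class below is a hypothetical model of that bookkeeping, not Freeplay's implementation; the version IDs and the prod environment name are assumptions:

```python
class EnvironmentTags:
    """Track which prompt version each environment points to (hypothetical model)."""

    def __init__(self):
        self.tags = {}  # environment name -> version id

    def save_version(self, version_id: str) -> None:
        # Every newly saved version is tagged "latest" by default
        self.tags["latest"] = version_id

    def deploy(self, version_id: str, environment: str) -> None:
        # Deploying retags one environment without touching the others
        self.tags[environment] = version_id

    def resolve(self, environment: str) -> str:
        return self.tags[environment]


tags = EnvironmentTags()
tags.save_version("v1")
tags.deploy("v1", "dev")
tags.deploy("v1", "prod")
tags.save_version("v2")
tags.deploy("v2", "dev")  # v2 is promoted to dev, while prod stays on v1
```

Because each environment is just a pointer, promoting a version to a higher environment never disturbs what lower or higher environments are currently serving.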

Step 3: Fetching Prompts in Code

Once a prompt version has been created it can be fetched via the SDK to be used in code.

There are a number of ways to fetch prompt templates (full details here), but the most common method is to retrieve a formatted prompt from a specific environment:

```python
# fpClient is an initialized Freeplay client; project_id is your Freeplay project ID
formatted_prompt = fpClient.prompts.get_formatted(
    project_id=project_id,
    template_name="rag-qa",
    environment="dev",
    variables={"keyA": "valueA"},
)
```

This will retrieve the version of the rag-qa prompt that is tagged with the dev environment. It will also inject the provided variable values to form a fully hydrated prompt.

This returns a formatted prompt object, which is a convenient data object to use when calling your LLM. You can read the messages and model information from this object and know they are formatted properly for your provider.

```python
from openai import OpenAI

openai_client = OpenAI()  # reads OPENAI_API_KEY from the environment

# Use the model, messages, and parameters carried by the formatted prompt
chat_response = openai_client.chat.completions.create(
    model=formatted_prompt.prompt_info.model,
    messages=formatted_prompt.llm_prompt,
    **formatted_prompt.prompt_info.model_parameters,
)
```

Note: By default, prompt retrieval fetches prompts from the Freeplay server each time. To remove that dependency, you can use prompt bundling, which copies the prompts to your local filesystem and retrieves them from there, removing the network call altogether.

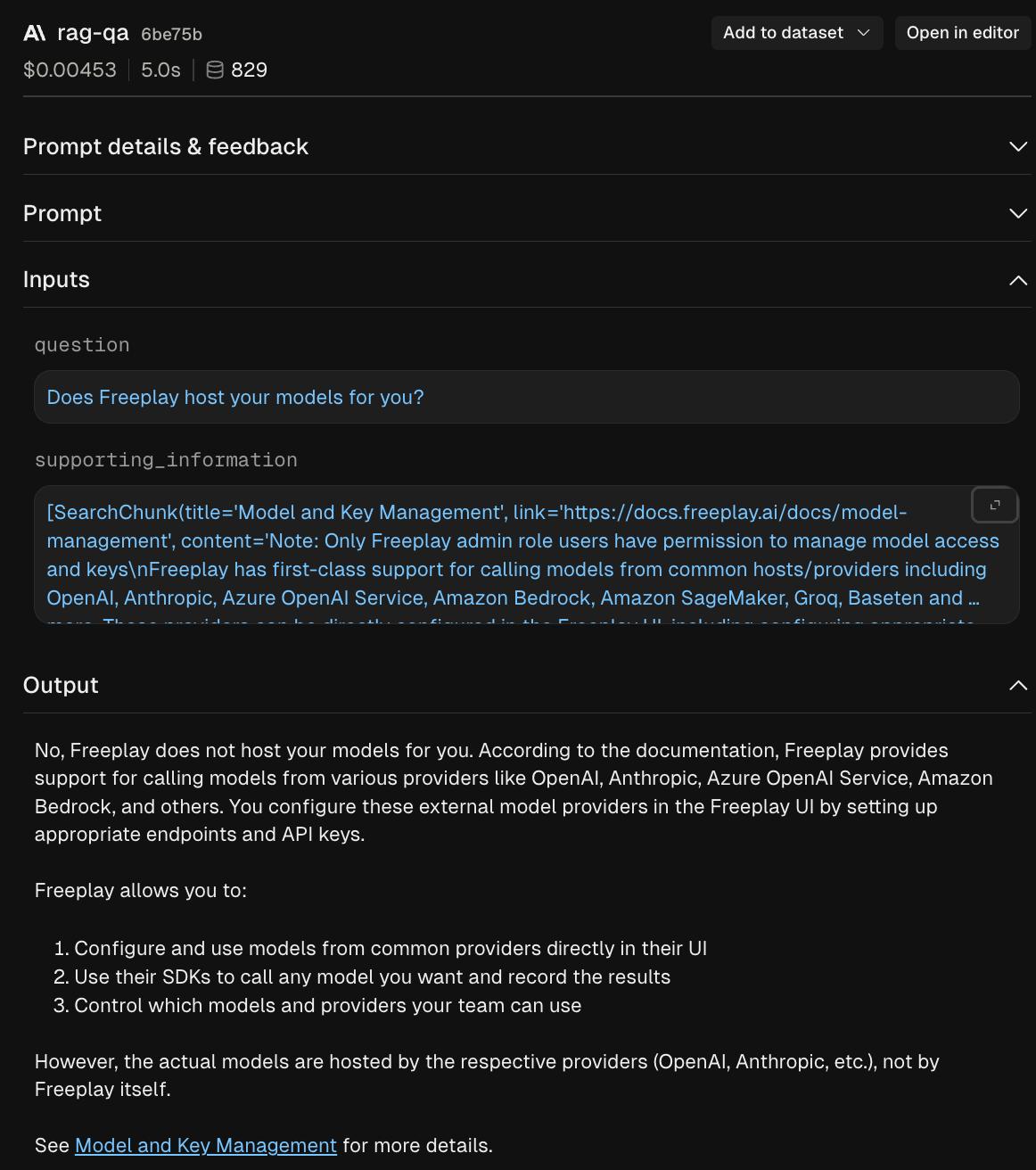
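The idea behind prompt bundling can be sketched with the standard library: prompts are written to disk ahead of time and read back at runtime without a network call. The directory layout and JSON shape below are assumptions for illustration, not the format Freeplay's bundling actually uses:

```python
import json
import tempfile
from pathlib import Path


def bundle_prompt(root: Path, environment: str, template_name: str, prompt: dict) -> Path:
    """Write a prompt into a local bundle as <root>/<environment>/<template_name>.json."""
    path = root / environment / f"{template_name}.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(prompt, indent=2, sort_keys=True))
    return path


def load_bundled_prompt(root: Path, environment: str, template_name: str) -> dict:
    """Read a bundled prompt from disk; no network call is involved."""
    path = root / environment / f"{template_name}.json"
    return json.loads(path.read_text())


# Hypothetical prompt shape, bundled and read back from a temporary directory
example_prompt = {
    "model": "gpt-4.1",
    "messages": [{"role": "user", "content": "{{question}}"}],
}
with tempfile.TemporaryDirectory() as tmp:
    bundle_prompt(Path(tmp), "dev", "rag-qa", example_prompt)
    loaded = load_bundled_prompt(Path(tmp), "dev", "rag-qa")
```

Keying the bundle by environment keeps the same lookup semantics as fetching from a specific environment on the server.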

Step 4: Recording back to Freeplay

When recording back to Freeplay you will pass through the prompt information to associated the specific prompt version with that observed completion.

from freeplay import RecordPayload

payload = RecordPayload(

project_id=project_id

all_messages=all_messages,

inputs=prompt_vars,

session_info=session,

prompt_version_info=formatted_prompt.prompt_info,

call_info=CallInfo.from_prompt_info(formatted_prompt.prompt_info, start_time=start, end_time=end, usage=UsageTokens(chat_response.usage.prompt_tokens, chat_response.usage.completion_tokens)),

)

# record the LLM interaction

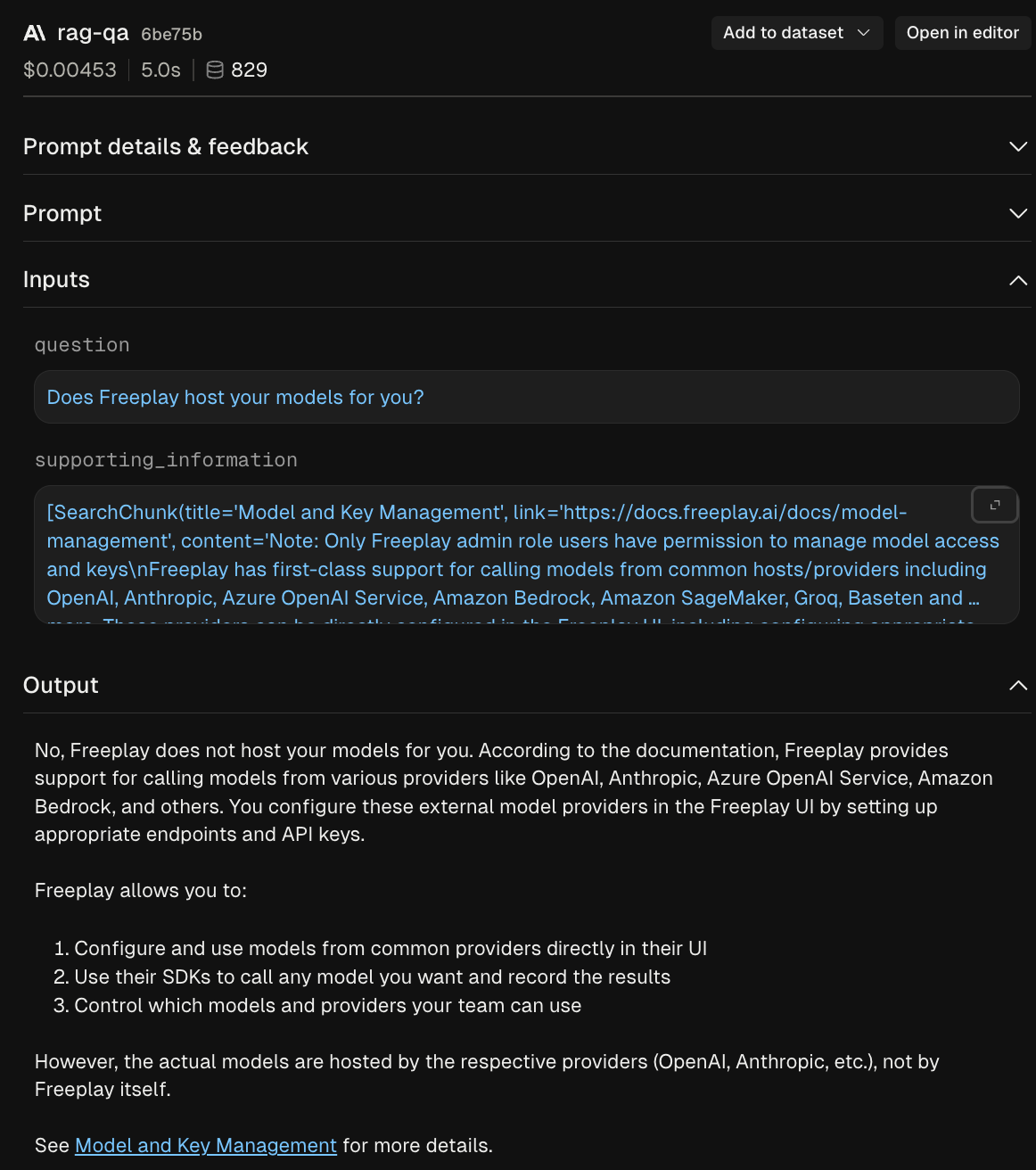

fpClient.recordings.create(payload)By linking the prompt to the observed completion you will get a structured recording like this.

You can even open the completion back in the prompt editor to rerun it and make further tweaks to continue the iteration cycle.
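The recording example passes start and end times for the LLM call. One simple way to capture them is to wrap the call with time.time(). The helper and the stand-in LLM function below are hypothetical, and whether your SDK version expects Unix timestamps in seconds is an assumption to verify against the Freeplay SDK reference:

```python
import time


def timed_call(fn, *args, **kwargs):
    """Call fn and return (result, start, end) as Unix timestamps in seconds."""
    start = time.time()
    result = fn(*args, **kwargs)
    end = time.time()
    return result, start, end


# Stand-in for a real LLM call such as openai_client.chat.completions.create
def fake_llm_call(prompt: str) -> str:
    return f"echo: {prompt}"


response, start, end = timed_call(fake_llm_call, "hello")
latency_seconds = end - start
```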

Code as the source of truth for prompts

In this usage pattern, new prompt versions originate in code and are pushed to Freeplay programmatically. Source code becomes the source of truth for the latest prompt version, but prompt versions are also reflected in Freeplay to power experimentation and evaluation.

Step 1: Push new prompt version to Freeplay

Freeplay provides APIs and SDK methods to push new versions of a prompt.

When you create a new version in code, you’ll push that version to Freeplay.

(This method doesn’t exist yet; the exact naming and structure shown here are not meant to be prescriptive.)

```bash
curl -X POST "https://api.freeplay.ai/api/v2/projects/<project-id>/prompt-templates/name/<template-name>/versions" \
  -H "Authorization: Bearer <YOUR_API_KEY>" \
  -H "Content-Type: application/json" \
  -d '{
    "template_messages": [
      {
        "role": "system",
        "content": "some content here with mustache {{variable}} syntax"
      }
    ],
    "provider": "openai",
    "model": "gpt-4.1",
    "llm_parameters": {
      "temperature": 0.2,
      "max_tokens": 256
    },
    "version_name": "dev-version",
    "version_description": "Development test version with mustache variable"
  }'
```

This pushes your new prompt template version to Freeplay and returns a corresponding UUID for the prompt template version. See the full details on methods for synchronizing prompts here.
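The same push can be prepared from Python with only the standard library. This sketch builds the request without sending it; the endpoint shape is copied from the curl example and, per the note above, may not match the final API. The project ID and API key values are placeholders:

```python
import json
import urllib.request
from urllib.parse import quote

API_BASE = "https://api.freeplay.ai/api/v2"


def build_push_request(project_id: str, template_name: str, api_key: str,
                       version: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request that pushes a prompt template version."""
    url = (f"{API_BASE}/projects/{quote(project_id, safe='')}"
           f"/prompt-templates/name/{quote(template_name, safe='')}/versions")
    return urllib.request.Request(
        url,
        data=json.dumps(version).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


version = {
    "template_messages": [
        {"role": "system", "content": "some content here with mustache {{variable}} syntax"}
    ],
    "provider": "openai",
    "model": "gpt-4.1",
    "llm_parameters": {"temperature": 0.2, "max_tokens": 256},
    "version_name": "dev-version",
    "version_description": "Development test version with mustache variable",
}
request = build_push_request("my-project-id", "rag-qa", "YOUR_API_KEY", version)
# To actually send it: urllib.request.urlopen(request)
```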

Different teams will do this prompt synchronization at different points in their pipeline. The most common approach is to synchronize prompt versions to Freeplay as part of your build process.
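A build step only needs to push prompts that actually changed. One way to decide is to compare content hashes. This sketch assumes, hypothetically, that prompts live in code as plain dictionaries and that you keep a manifest of the fingerprints recorded at the last successful push:

```python
import hashlib
import json


def fingerprint(version: dict) -> str:
    """Stable SHA-256 of a prompt version; key order does not affect the result."""
    canonical = json.dumps(version, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def prompts_to_push(local_prompts: dict, manifest: dict) -> list:
    """Return template names whose local definition differs from the last pushed one.

    local_prompts maps template name -> version dict; manifest maps
    template name -> fingerprint recorded after the previous successful push.
    """
    return sorted(
        name
        for name, version in local_prompts.items()
        if manifest.get(name) != fingerprint(version)
    )


local = {
    "rag-qa": {"model": "gpt-4.1", "template_messages": [{"role": "system", "content": "v2"}]},
    "summarize": {"model": "gpt-4.1", "template_messages": [{"role": "system", "content": "v1"}]},
}
manifest = {"summarize": fingerprint(local["summarize"])}
```

Here prompts_to_push(local, manifest) selects only rag-qa, because summarize matches its recorded fingerprint. After a successful push, the build would update the manifest with the new fingerprints.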

Step 2: Associate the new prompt version with your logged data

Because you are not fetching prompts from Freeplay, you won’t have a prompt retrieval step like the one above, but you will still want to associate each completion with the appropriate prompt template version.

```python
# template_id identifies the prompt template in Freeplay; new_version_id is the
# UUID returned when the new version was pushed in Step 1
fp_client.recordings.create(
    RecordPayload(
        project_id=project_id,
        all_messages=messages,
        inputs={"keyA": "valueA"},
        prompt_info=PromptInfo(
            prompt_template_id=template_id,
            prompt_template_version_id=new_version_id,
        ),
    )
)
```

By reflecting prompts in Freeplay, you get structured logging and the ability to run prompts directly from the UI.
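The recording call above needs the template and version IDs at runtime. A minimal sketch, assuming your build step writes the IDs returned by the push call into a small JSON registry shipped with your application (a hypothetical convention, with made-up ID values), is:

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class PushedVersion:
    """IDs to attach to recordings for one prompt template version (hypothetical)."""
    template_id: str
    version_id: str


def load_registry(text: str) -> dict:
    """Parse a JSON registry written at build time: name -> {template_id, version_id}."""
    raw = json.loads(text)
    return {name: PushedVersion(**ids) for name, ids in raw.items()}


registry_json = '{"rag-qa": {"template_id": "tmpl-123", "version_id": "ver-456"}}'
registry = load_registry(registry_json)
ids = registry["rag-qa"]
```

At recording time, ids.template_id and ids.version_id would be passed as prompt_template_id and prompt_template_version_id.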

[Optional] Step 3: Syncing prompts from Freeplay into code

Even if you treat code as the source of truth for prompts, you can still use Freeplay’s prompt iteration tooling to create new prompt versions. Freeplay provides a number of APIs and SDK methods to sync prompts from Freeplay back into your code.
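Once a new version has been fetched through one of those APIs, writing it back into your repository is ordinary file I/O. The sketch below assumes the fetched version is already a plain dictionary and uses a hypothetical prompts/<name>.json layout; it only rewrites the file when the content changed, which keeps version-control diffs meaningful:

```python
import json
import tempfile
from pathlib import Path


def write_if_changed(path: Path, version: dict) -> bool:
    """Write a prompt version as canonical JSON; return True only if the file changed."""
    new_text = json.dumps(version, indent=2, sort_keys=True) + "\n"
    if path.exists() and path.read_text() == new_text:
        return False  # already in sync: leave the file (and your diff) untouched
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(new_text)
    return True


# Hypothetical fetched version, synced twice into a temporary checkout
fetched = {"model": "gpt-4.1", "template_messages": [{"role": "system", "content": "v3"}]}
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "prompts" / "rag-qa.json"
    first_write = write_if_changed(target, fetched)
    second_write = write_if_changed(target, fetched)
```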


Now that we've set up a robust Prompt Template and learned about Version Control and Environments, let's set up some Evaluations.